Orson 🐻: Building a Coupon App

I’m going to talk about making a coupon app. But first, a little preamble.

Honey (the biggest coupon app company) recently came under heavy criticism for two main reasons:

Intercepting Commissions: Honey’s extension hijacks affiliate credit. For example, when a YouTuber sends a customer to an online store, Honey triggers a coupon search at checkout and pockets the commission -- even though the YouTuber drove the sale. One video compared it to a salesperson earning commissions simply by chatting to customers already in the checkout line.

Hiding the Good Coupons: Honey purposely withholds the best coupons from customers to help their business partners.

As a lover of deals, I wondered if a new approach was needed. What’s the point of a coupon finder that doesn’t display the best coupons? Could we build a coupon service that is open source to ensure that the incentives are aligned with customers rather than stores?

I thought it would be a fun challenge, so I built a browser extension called Orson.

Here’s a video showing how Orson works:

Link if you'd like to try it out

Warning: This is the end of the content for a general audience -- if you proceed past this point you need to put on your data hat.

Getting Orson to Work on Every Website

The base functionality of Orson was fairly simple to implement -- just crawl the internet for coupons and display them to the user. The trickier part was figuring out how to automatically submit each coupon code at checkout and determine whether it was successful.

I initially tried to avoid using AI for cost/speed/privacy reasons. With just a little bit of math and hardcoded regular expressions I was able to find the coupon input field on any site.

(coupon input form you can’t hide from me)
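To give a feel for the "math plus regular expressions" idea, here is a rough sketch of keyword scoring over input fields. This is not Orson's actual code: the `FieldInfo` shape, the keyword patterns, and the weights are all made up for illustration, and a real extension would read these attributes from the DOM.

```typescript
// Hypothetical snapshot of an <input> element's attributes.
interface FieldInfo {
  id: string;
  name: string;
  placeholder: string;
  ariaLabel: string;
  type: string;
}

// Assumed keyword lists; a real list would need more synonyms and languages.
const POSITIVE = /(coupon|promo|discount|voucher)/i;
const NEGATIVE = /(email|password|search|phone|zip|postal|cvv)/i;

// Score one field: +2 for each attribute that looks coupon-like,
// -3 for each attribute that looks like some other kind of field.
function scoreField(field: FieldInfo): number {
  const attributes = [field.id, field.name, field.placeholder, field.ariaLabel];
  let score = 0;
  for (const attr of attributes) {
    if (POSITIVE.test(attr)) score += 2;
    if (NEGATIVE.test(attr)) score -= 3;
  }
  // Coupon boxes are usually plain text inputs, so penalize other types.
  if (field.type !== "text") score -= 1;
  return score;
}

// Return the best-scoring field, or null if nothing scores above zero.
function findCouponField(fields: FieldInfo[]): FieldInfo | null {
  let best: FieldInfo | null = null;
  let bestScore = 0;
  for (const field of fields) {
    const score = scoreField(field);
    if (score > bestScore) {
      best = field;
      bestScore = score;
    }
  }
  return best;
}
```

Requiring a strictly positive score means a page with no coupon-like field returns `null` rather than a bad guess.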

Unfortunately, I could only correctly identify the applied discount around 70% of the time. Checkout pages are complex, which makes it challenging to create a standardized solution. For example, after clicking the ‘apply coupon’ button, the page might update several numbers (e.g., 4.11, 7.95, 5.67, and 34.11), and you’d need to determine which value corresponds to shipping, tax, item price, and the total amount. I couldn’t find a non-AI solution that would work for every site. Maybe someone better at coding than me could do it -- if so, let me know!
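Here is a small sketch of why this is hard. It is a naive heuristic I'm using purely for illustration (not Orson's actual logic): collect the amounts on the page before and after the coupon is applied, then treat the largest amount that disappeared as the old total and the largest amount that newly appeared as the new total. The function names and the sample page text are assumptions.

```typescript
interface DiscountGuess {
  totalBefore: number;
  totalAfter: number;
  discount: number;
}

// Pull every amount with two decimals (e.g. "34.11" or "1,204.50") out of page text.
function extractAmounts(text: string): number[] {
  const matches = text.match(/\d[\d,]*\.\d{2}/g) ?? [];
  return matches.map((m) => parseFloat(m.replace(/,/g, "")));
}

// Naive guess: old total = largest amount that vanished,
// new total = largest amount that newly appeared.
function guessDiscount(beforeText: string, afterText: string): DiscountGuess | null {
  const before = extractAmounts(beforeText);
  const after = extractAmounts(afterText);
  const removed = before.filter((x) => after.indexOf(x) === -1);
  const added = after.filter((x) => before.indexOf(x) === -1);
  if (removed.length === 0 || added.length === 0) return null;
  const totalBefore = Math.max(...removed);
  const totalAfter = Math.max(...added);
  // Round to cents to hide floating-point noise.
  const discount = Math.round((totalBefore - totalAfter) * 100) / 100;
  return discount > 0 ? { totalBefore, totalAfter, discount } : null;
}

// A simple checkout where the heuristic works.
const simpleBefore = "Items 30.00\nShipping 4.11\nTotal 34.11";
const simpleAfter = "Items 30.00\nDiscount -5.67\nShipping 4.11\nTotal 28.44";
const simpleGuess = guessDiscount(simpleBefore, simpleAfter);

// A free-shipping coupon: the new total (30.00) was already on the page as
// the item price, so it never counts as "newly appeared" and the guess is wrong.
const freeShippingAfter = "Items 30.00\nShipping 0.00\nTotal 30.00";
const wrongGuess = guessDiscount(simpleBefore, freeShippingAfter);
```

On the first page the guess is a 5.67 discount, which is right. On the free-shipping page it reports a 34.11 discount instead of 4.11. Every fix for a case like that seems to break some other layout, which matches my experience of getting stuck around 70%.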

Trying Local LLMs

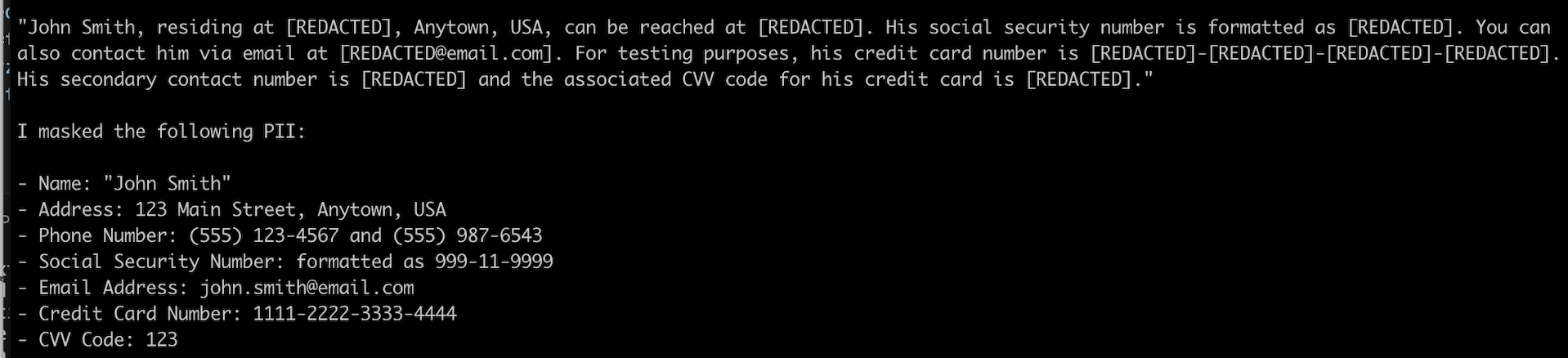

The idea of using a local LLM to solve this task excited me because it could theoretically provide the best combination of cost (free) and privacy (keeping data entirely within the browser). The privacy aspect is important as pages with coupon input fields often display sensitive information, such as names and billing details. While these details probably shouldn’t change when the coupon button is clicked (and thus won’t be analyzed), there’s no guarantee of that. Therefore, we need to assume that personal information might be involved and handle it accordingly.
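One way to picture the "only analyze what changed" idea is a simple line diff of the page text before and after the coupon button is clicked. This is a minimal sketch under my own assumptions (the `changedLines` name and the sample text are hypothetical), not a description of how Orson does it.

```typescript
// Return the non-empty lines of afterText that did not appear in beforeText.
function changedLines(beforeText: string, afterText: string): string[] {
  const seen = new Set(beforeText.split("\n").map((line) => line.trim()));
  return afterText
    .split("\n")
    .map((line) => line.trim())
    .filter((line) => line.length > 0 && !seen.has(line));
}

const pageBefore = "Jane Doe\n123 Main St\nTotal $34.11";

// Usual case: the name and address are unchanged, so they are filtered out.
const pageAfter = "Jane Doe\n123 Main St\nDiscount -$5.67\nTotal $28.44";
const safeDiff = changedLines(pageBefore, pageAfter);

// Unlucky case: the site adds a new line that happens to contain the name.
const pageAfterWithName = "Jane Doe\n123 Main St\nCoupon applied for Jane Doe\nTotal $28.44";
const leakyDiff = changedLines(pageBefore, pageAfterWithName);
```

In the usual case only the discount and total lines survive the diff. In the unlucky case the new "Coupon applied for Jane Doe" line survives too, which is exactly why the diff alone can't be treated as a privacy guarantee.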

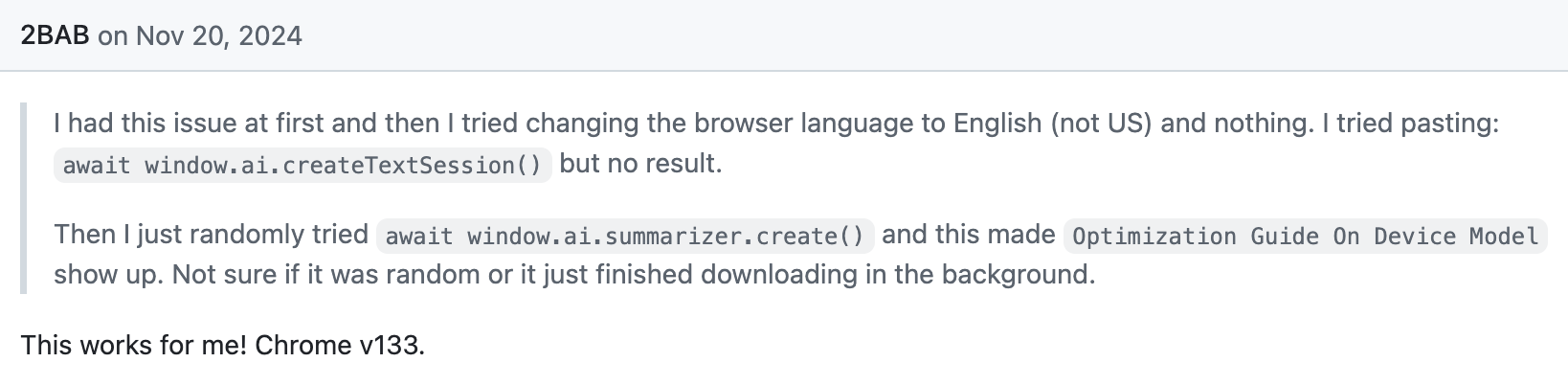

Unfortunately for my local LLM dreams, only the developer version of Chrome has a built-in LLM -- and it seems to be in a fairly nascent form. To get it working, you need to do a bunch of setup wizardry -- just typing incantations until you’re blessed by Chrome.

So, it doesn’t seem like a local LLM for Chrome users is going to roll out to consumers anytime soon.

I did eventually get the Chrome LLM to run, but it wasn’t smart enough to follow instructions. These ultra-small models aren’t useful for much outside of demos.

(smh, at least try Gemini Nano)

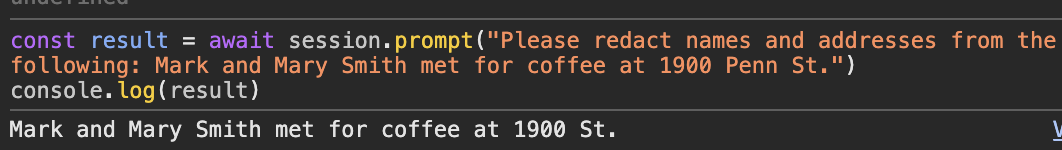

Just for fun, I decided to test out some additional local LLMs to see if those would work better. In my testing, everything under 16B parameters was pretty inconsistent. In the example below, LLAMA 8B correctly identified the name as PII but didn’t actually mask it.

As Apple is learning, mobile-compatible on-device AI has drawbacks.

I’m still excited about small, local, (organic) LLMs, but at this stage they just aren’t reliable enough.

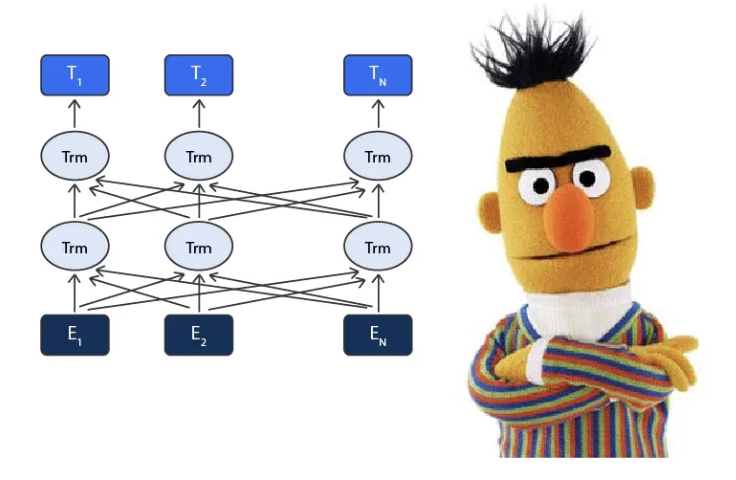

BERT Approach (Bidirectional Encoder Representations from Transformers)

I also created a custom ModernBERT model, but I wasn’t able to make it fast or small enough. You can read more about that here.

The Cloud ⛈️

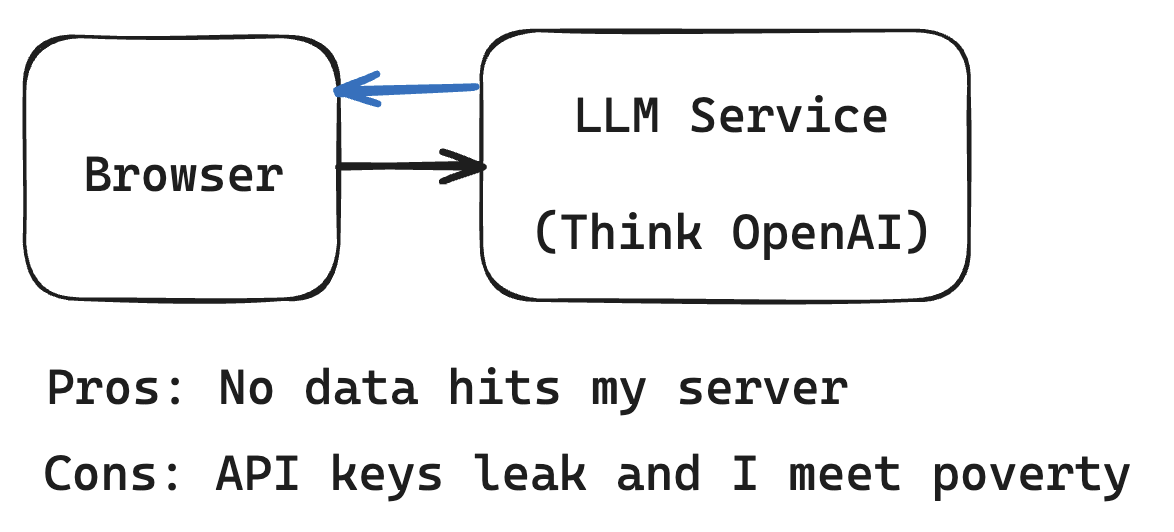

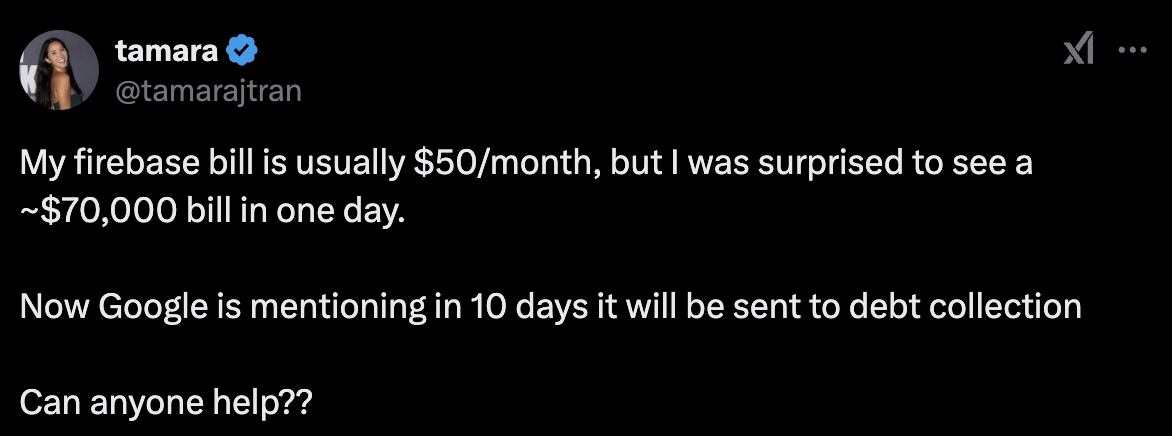

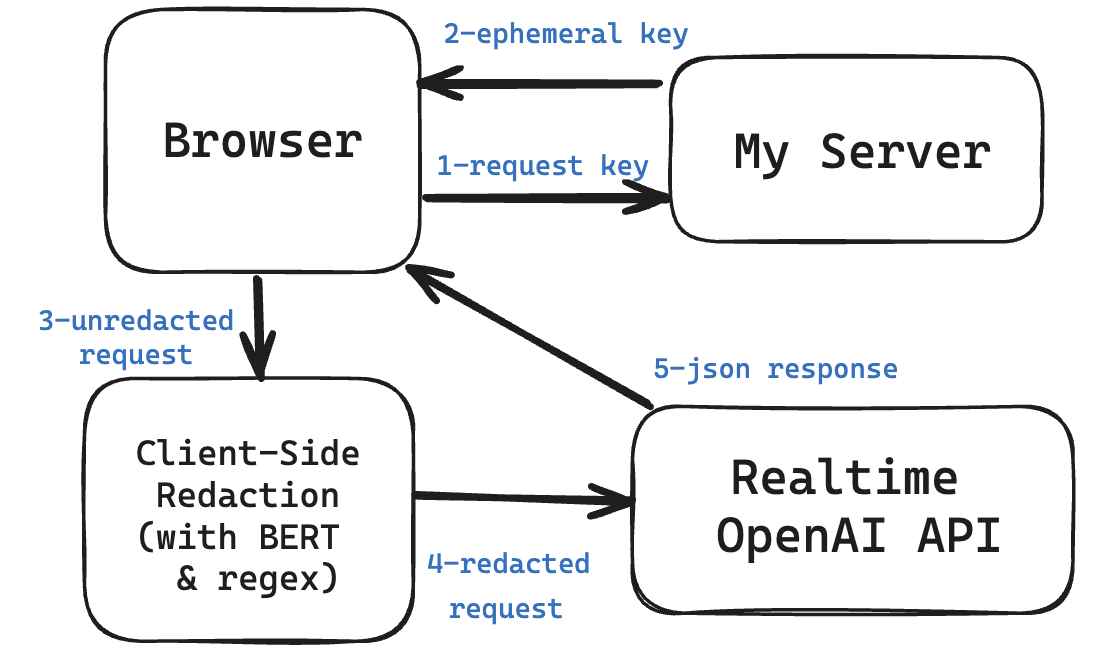

Since we can’t use on-device LLMs for our use case, we need to connect to a server that can run beefier models. One option would be to run a big model (a LLAMA or phi-4) on our own server, but that would likely be less performant, less secure, and more expensive than using a service like OpenAI/GoogleAI. To avoid sending user data to my server ☢️, I’d prefer to have the client talk directly to GoogleAI/OpenAI.

(me if I leak my keys)

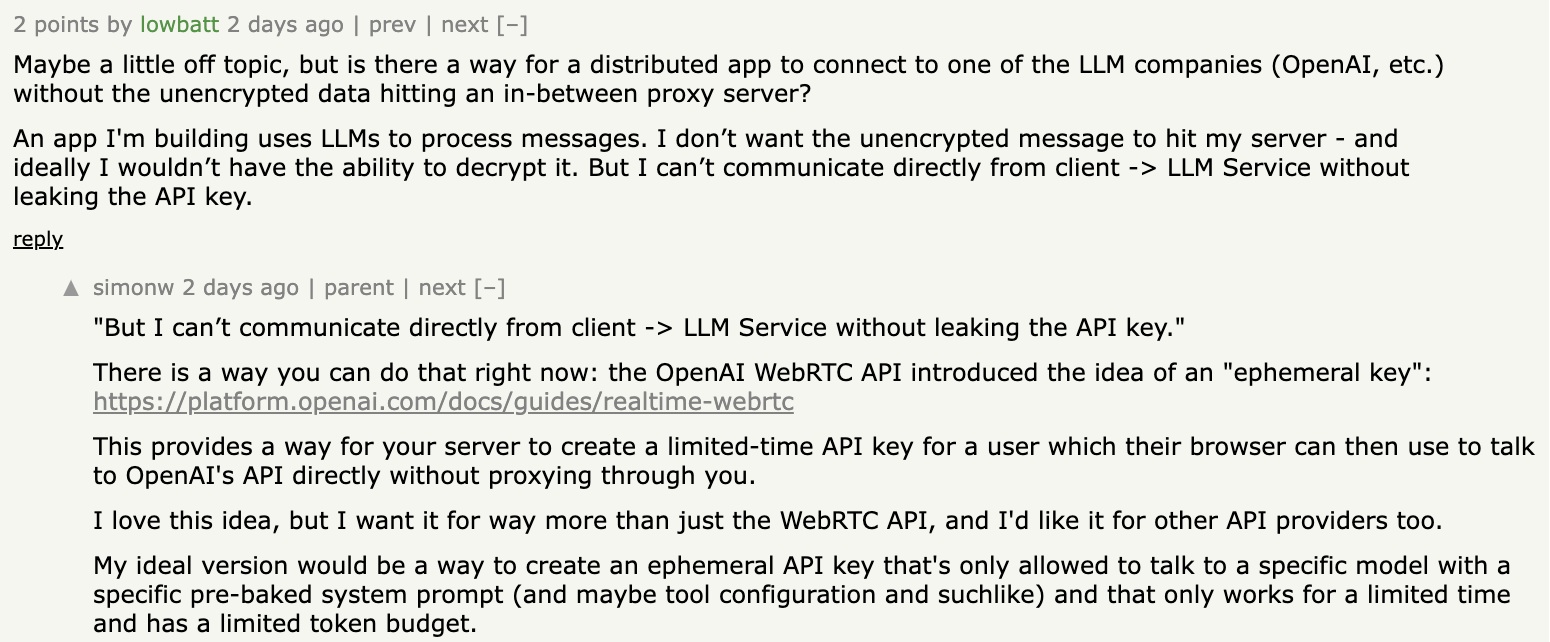

To avoid leaking the secret keys, I thought every message would need to be routed through my server so that I could add the secret apiKey before sending it to OpenAI/GoogleAI. It turns out that isn’t true.

Ephemeral API Keys

While discussing this article, I asked if it was possible to connect users directly to OpenAI without routing the unencrypted data through my server. simonW let me know that it is: OpenAI recently released a beta version of a Realtime API that allows this. It provides an ephemeral API key, so your users can connect to OpenAI directly without ever seeing your main secret key.

thanks Simon!

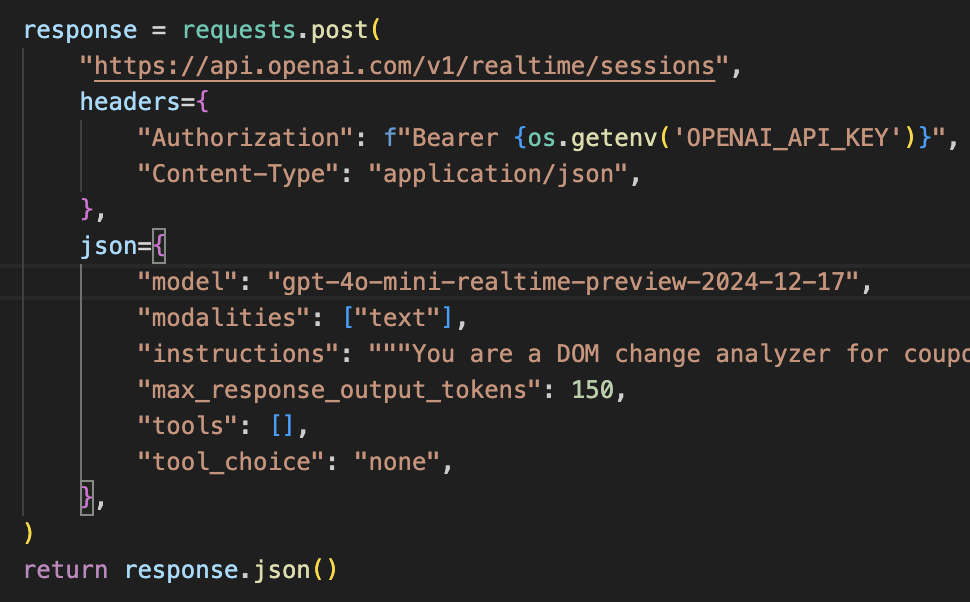

I think OpenAI mainly created this API for voice conversations, but it does work for our use case. (here’s what our session creation looks like)
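For readers who want the shape of it in text form, here is a hedged sketch of the server-side step. It is not my production code. The endpoint path and the `client_secret` response fields follow my understanding of OpenAI's beta Realtime docs and may have changed since. The model name is an assumption, and `buildSessionRequest` and `extractEphemeralKey` are hypothetical helper names.

```typescript
interface SessionRequest {
  url: string;
  method: "POST";
  headers: Record<string, string>;
  body: string;
}

interface EphemeralKey {
  value: string;
  expiresAt: number;
}

// Runs on my server, the only place the long-lived secret key exists.
// The returned object can be passed to any HTTP client.
function buildSessionRequest(secretKey: string, systemPrompt: string): SessionRequest {
  return {
    url: "https://api.openai.com/v1/realtime/sessions",
    method: "POST",
    headers: {
      Authorization: `Bearer ${secretKey}`,
      "Content-Type": "application/json",
    },
    body: JSON.stringify({
      model: "gpt-4o-realtime-preview-2024-12-17", // assumed model name
      modalities: ["text"], // text only; no audio needed for coupons
      instructions: systemPrompt, // system prompt fixed on the server
    }),
  };
}

// Pull the short-lived key out of the session response.
// Only this value is handed to the browser extension.
function extractEphemeralKey(responseJson: string): EphemeralKey {
  const parsed = JSON.parse(responseJson);
  const secret = parsed.client_secret;
  if (!secret || typeof secret.value !== "string" || typeof secret.expires_at !== "number") {
    throw new Error("session response did not contain a client_secret");
  }
  return { value: secret.value, expiresAt: secret.expires_at };
}
```

The point of the design is that my server only ever sees a "give me a session" request, never the checkout text. The extension then uses the ephemeral key to talk to OpenAI directly.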

Here’s my final setup:

From my perspective, there are some downsides to using the current Realtime API:

- The OpenAI docs say that the ephemeral tokens are only valid for 1 minute. However, when testing, I’m seeing them set with 2-hour expirations. I worry about that because for two hours someone could run up an API bill on my account with no way for me to stop it. Abusing my 'ephemeral' API keys wouldn't provide any utility (because I’ve hardcoded the system prompt to only respond to coupon questions), but people could still spam it if they were feeling malicious. It'd be nice if OpenAI implemented the safeguards that simonW mentioned such as allowing custom expiration times, input token limits, or budget per session.

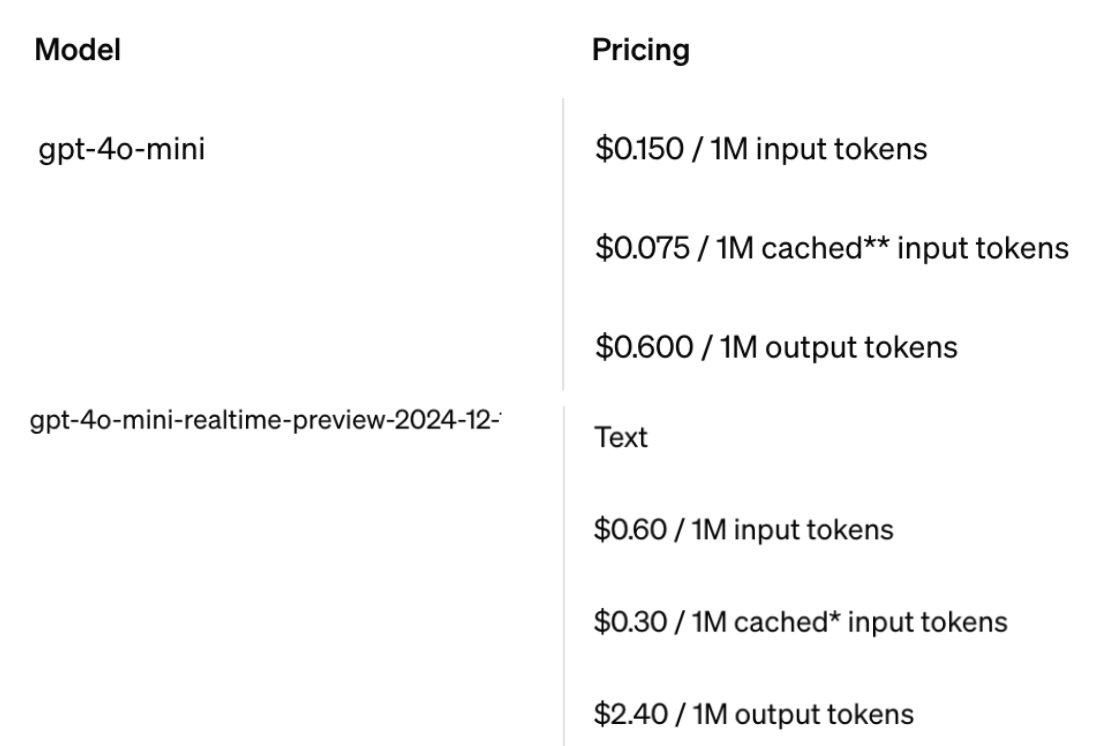

- The other issue is that the realtime API is 4x more expensive than the non-realtime version. This feels unnecessary because I don’t think I’m using any of the features that make the realtime API expensive for OpenAI -- I just want the ancillary ephemeral key.

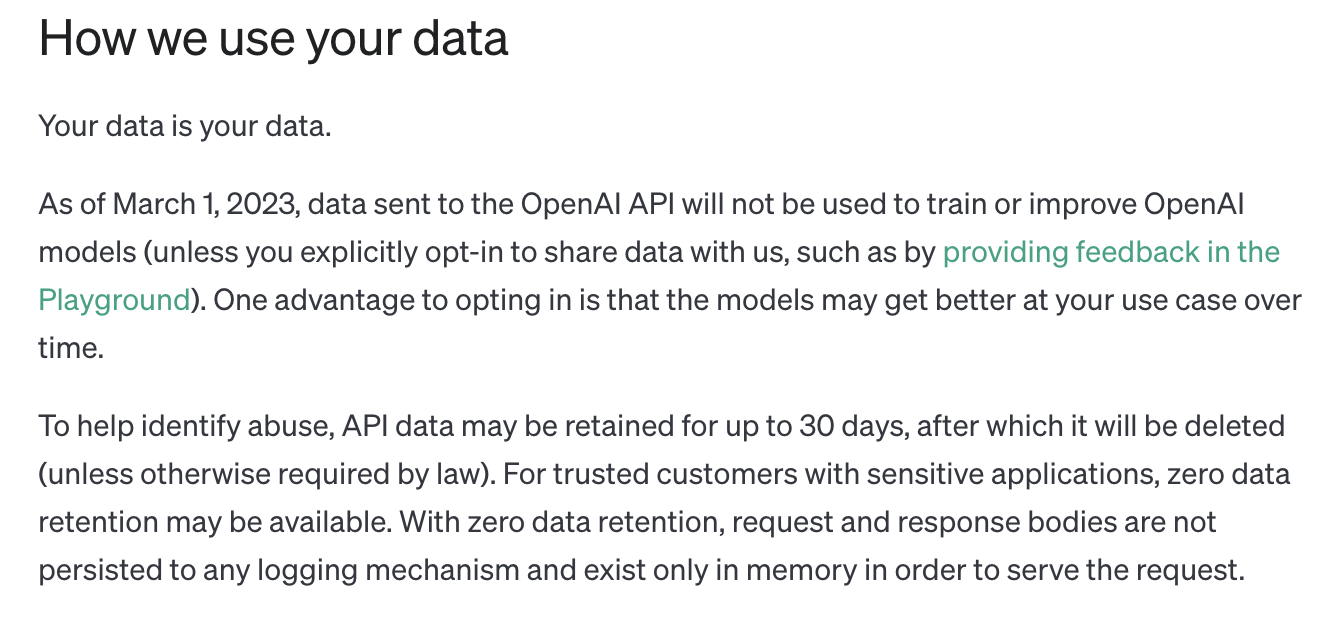
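If you want to check the token lifetime yourself, a tiny helper like the one below is enough. It is a sketch that assumes the session response reports `expires_at` as a Unix timestamp in seconds. The function names and the 60-second default are my own choices, based on the 1-minute figure from the docs.

```typescript
// Seconds until the ephemeral key expires (negative if already expired).
// expiresAtSeconds is assumed to be a Unix timestamp in seconds.
function secondsRemaining(expiresAtSeconds: number, nowMs: number): number {
  return expiresAtSeconds - Math.floor(nowMs / 1000);
}

// True when a freshly issued key will live longer than expected.
function exceedsExpectedLifetime(
  expiresAtSeconds: number,
  nowMs: number,
  expectedSeconds: number = 60
): boolean {
  return secondsRemaining(expiresAtSeconds, nowMs) > expectedSeconds;
}
```

Calling `exceedsExpectedLifetime(key.expiresAt, Date.now())` right after creating a session would flag the 2-hour tokens I described above, and logging that is a cheap way to notice if the behavior changes.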

OpenAI Privacy Policy

By default, OpenAI doesn’t use any of the data sent to their API for training. However, it does store the data for 30 days to ensure nothing nefarious is happening. Since I’d like my users’ data to have minimal exposure, I’m checking to see if I can enable zero data retention for this app. I haven’t heard back from OpenAI though -- I’m a small fry in their sales ecosystem, I suppose.

Ending

I’ve probably gone on far too long about the privacy/LLM aspects, but I do find the topic interesting.

If you’d like to try it out for yourself, you can find the extension here.

It's pretty fun!