Chatbot Poker Tournament 🤖

Some believe AI Chatbots will exterminate humanity. Others predict they’ll cure disease.

I'm focusing on the most important question – how good are chatbots at poker? To find out, I built an arena for them to battle against each other.

If you want to watch them in action, here’s the video:

Setup

In the video, the AIs are playing completely autonomously. The bots receive screenshots of the board and must choose an action (raise / fold / call / check).
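
The post doesn't show how a bot's reply gets turned into one of those four actions, so here is a minimal sketch of one way it could work. It assumes the model answers in free text; `parse_action` is a hypothetical helper, not the code used in the video.

```python
import re
from typing import Optional

def parse_action(reply: str) -> Optional[str]:
    """Return the first action word found in the model's reply, or None.

    Assumption: the model answers in free text that mentions exactly one of
    the four actions. A stricter setup might request structured output instead.
    """
    match = re.search(r"\b(raise|fold|call|check)\b", reply.lower())
    return match.group(1) if match else None
```

If nothing matches, the caller could re-prompt the model or fall back to a default action.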

I chose to test OpenAI’s o4-mini, Claude 3.7, and Gemini 2.5 Flash.

My requirements were:

- easily accessible via API

- reasonably fast

- reasonably cheap

I ran this on an M1 Air and spent around $5 in API calls.

Disclaimers

As a caveat, state-of-the-art computer poker is more advanced than this. If you want to cheat at online poker, there are more effective methods. You can read about this here.

I’m just interested in determining how effective general, off-the-shelf reasoning models are at visually interpreting the board and making decisions.

Also, I’m not a poker expert.

Reading the Board

All three chatbots are extremely proficient at recognizing which cards are on the board.

When I ran this test two months ago, the previous-gen AIs (Gemini 2.0 and OpenAI’s GPT-4o) regularly misread cards. That happens much less frequently now. However, the chatbots still struggle with more nuanced aspects of board reading. For example, Gemini only occasionally recognized the “dealer button”, so it often misunderstood the betting order.

Chatbot Poker Weaknesses

- With weak hands, the chatbots would occasionally fold unnecessarily (when they could just check for free). That’s a pretty devastating flaw. I tried to fix this via the prompt, but they kept doing it

- Claude and Gemini seemed to overrate hands made via community cards. For example, if a flop came up Ten, Ten, Jack, they would be overly proud of their pair of Tens, even though every player at the table shares that pair

- They seemed too tight/conservative and surrendered the small blind too frequently

- o4-mini was quite slow, often taking 35+ seconds to make a decision. The other chatbots were much quicker (in the video I sped things up for watchability)
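
The unnecessary-fold problem in the first bullet could also be handled outside the prompt, with a small guard between the model and the game. This is only a sketch of that idea, not something I ran for the video; `sanitize_action` and `amount_to_call` are hypothetical names.

```python
def sanitize_action(action: str, amount_to_call: int) -> str:
    """Replace a free fold with a check; pass every other action through.

    Assumption: amount_to_call is the number of chips the bot must put in
    to stay in the hand (0 means checking is legal).
    """
    if action == "fold" and amount_to_call == 0:
        return "check"
    return action
```

Of course, a guard like this hides the flaw instead of measuring it, so it only makes sense if the goal is stronger play, not an honest test of the models.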

Chatbot Poker Strengths

- o4-mini was excellent at considering stack and pot sizes when making decisions. When it had the chip lead, it intelligently bullied smaller-stack opponents. It also occasionally tried to get bluffs through. o4-mini was definitely the most talented poker player

- The chatbots all made solid pre-flop decisions. They rarely entered pots with bad starting hands (though that meant their hand strength was often transparent)

- They didn’t “chase” missed draws and folded fairly prudently
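
For readers who don't play, the simplest version of "considering pot sizes" is pot odds: the share of the final pot you have to pay to call. Here is a minimal sketch of that standard arithmetic. I can't say whether o4-mini computed anything like this explicitly, and `pot_odds` is just an illustrative name.

```python
def pot_odds(pot: float, amount_to_call: float) -> float:
    """Minimum win probability needed for a call to break even.

    Assumption: pot already includes the opponent's bet.
    """
    if amount_to_call <= 0:
        return 0.0  # checking is free, so no equity is required
    return amount_to_call / (pot + amount_to_call)
```

For example, facing a 50-chip bet into a pot that now holds 150, `pot_odds(150, 50)` is 0.25, so a call breaks even if the hand wins at least a quarter of the time.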

Overall Skill Level

I believe even the best chatbot (o4-mini) would lose against humans at microstakes. I’d really like to test this by having it play online for money, but I probably can’t ethically do that. I’d need to create a custom game where people choose to play against it. Maybe I’ll do this later?

AI Trash Talking

To personalize things, I gave each AI a cyborg personality.

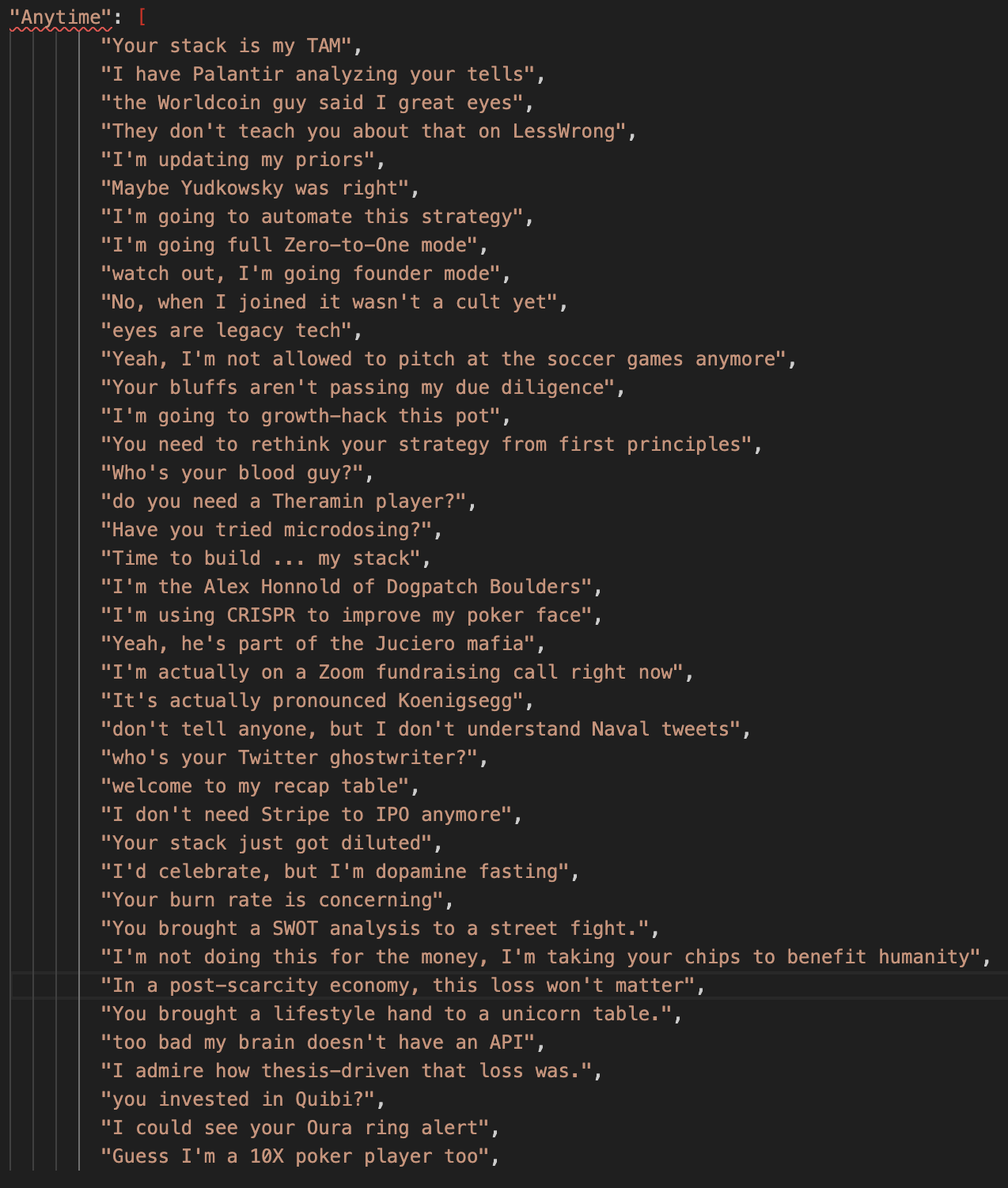

Claude is a cyborg derivatives trader, Gemini is cyborg Sherlock Holmes, and OpenAI is a cyborg VC investor.

I wanted them to trash-talk each other via quippy one-liners.

Gemini and OpenAI wrote pretty solid lines for the Sherlock Holmes character. Probably better than what I could do on short notice. Maybe the old-timey language masks some of the AI awkwardness?

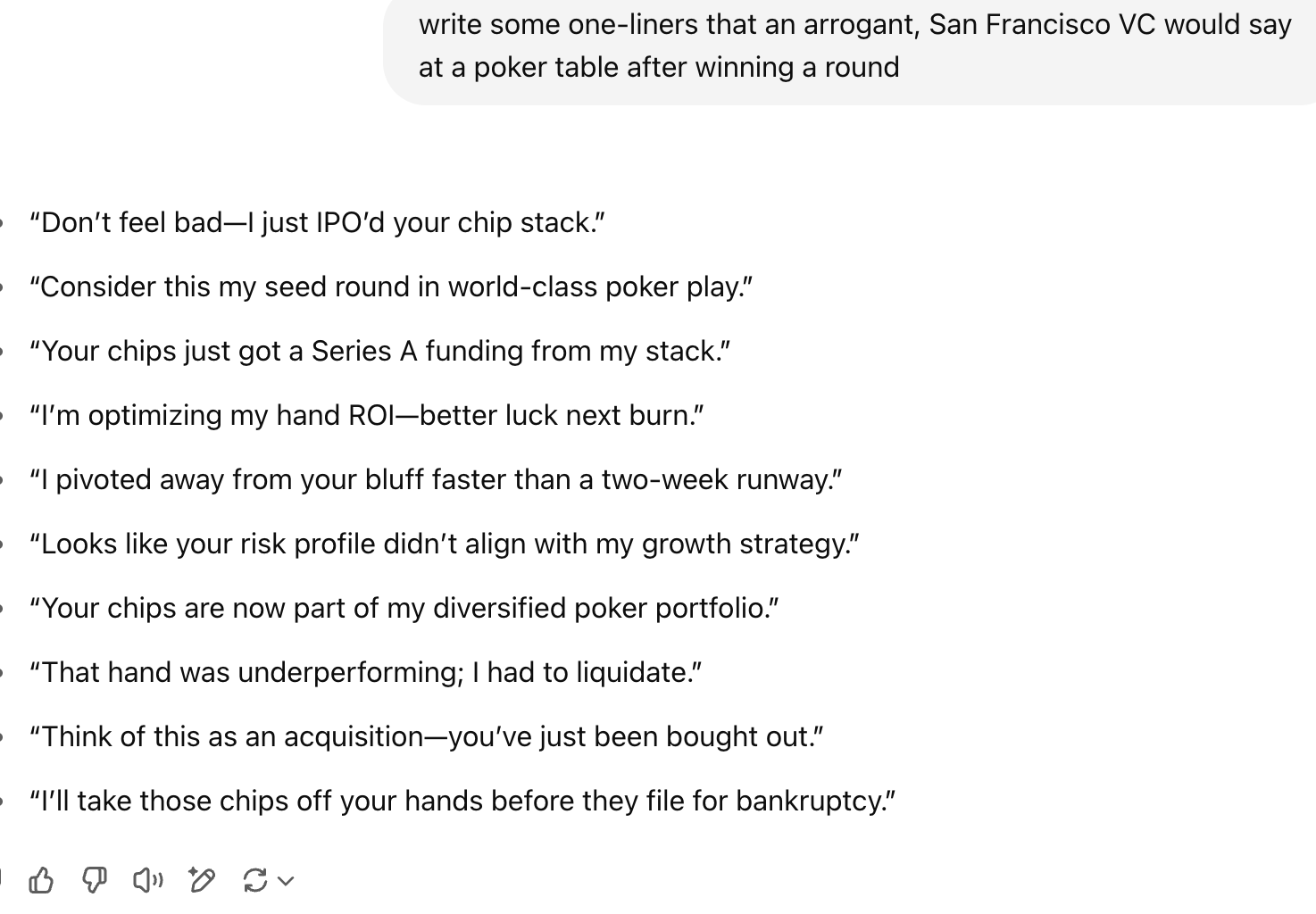

For the Derivatives Trader and VC characters, I didn’t enjoy the AI-generated one-liners quite as much, so I ended up writing their lines myself.

Here are some examples from ChatGPT:

Trash talk written by mlamGPT:

Who had the better one-liners? Impossible for me to say. But I still have fun writing things, so I’m not letting AI take over that part yet.

Conclusion

If you have any other models you’d like me to test, just let me know! Lamers@outlook.com